Meet IBM’s new family of AI models for materials discovery

The open-source foundation models for chemistry are aimed at accelerating the search for new, more sustainable materials with applications in chip fabrication, clean energy, and consumer packaging.

The U.S. Environmental Protection Agency tracks the release of nearly 800 toxic substances that companies would readily phase out if greener, high-performing alternatives could be found. Now, AI has the potential to give scientists powerful new tools to do just that, by uncovering new materials that could be safer for humans and the environment.

Foundation models pre-trained on vast molecular databases can be used to screen millions of molecules at a time for desirable properties while weeding out the ones with dangerous side effects. These models can also be used to generate molecules entirely new to nature, circumventing the traditional drawn-out, trial-and-error-based discovery process.
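As a rough illustration of the screening step, here is a minimal Python sketch that filters a candidate list with two predictors and ranks what survives. The predictor functions, the 0.5 toxicity cutoff, and the toy scores are all hypothetical placeholders, not IBM's models or data; in practice each predictor would be a foundation model fine-tuned for that property.

```python
from typing import Callable, Iterable

# Hypothetical predictor signature: SMILES string -> score.
Predictor = Callable[[str], float]


def screen(
    candidates: Iterable[str],
    predict_property: Predictor,
    predict_toxicity: Predictor,
    toxicity_cutoff: float = 0.5,
    top_n: int = 10,
) -> list[tuple[str, float]]:
    """Drop candidates predicted to be toxic, then rank the rest by the target property."""
    kept = []
    for smiles in candidates:
        if predict_toxicity(smiles) >= toxicity_cutoff:
            continue  # weed out likely-toxic molecules before scoring them
        kept.append((smiles, predict_property(smiles)))
    kept.sort(key=lambda pair: pair[1], reverse=True)  # best property score first
    return kept[:top_n]


# Toy lookup tables standing in for model predictions (made-up numbers).
toy_property = {"CCO": 0.2, "CCN": 0.9, "c1ccccc1": 0.7}
toy_toxicity = {"CCO": 0.1, "CCN": 0.3, "c1ccccc1": 0.8}

print(screen(toy_property, lambda s: toy_property[s], lambda s: toy_toxicity[s]))
# [('CCN', 0.9), ('CCO', 0.2)]
```

Filtering before scoring means the (typically more expensive) property model never runs on candidates that are already ruled out.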

In recent months, IBM Research has released a new family of open-source foundation models on GitHub and Hugging Face. Anyone with a small amount of data can customize the models for their own applications, whether it’s a search for better battery materials to store power from the sun and wind or for replacements for the toxic PFAS ‘forever’ chemicals found in everything from nonstick pans to the chips inside your laptop or phone. In addition to the models, IBM has devised several methods for fusing together different molecular representations.

The models can be used alone or together, and in a few short months, they have been downloaded more than 100,000 times. “We’re encouraged by the strong interest we’ve seen so far,” says Seiji Takeda, a principal scientist co-leading IBM Research’s foundation models for materials (FM4M) project. “We are excited to see what the community comes up with.”

Machine-readable molecules

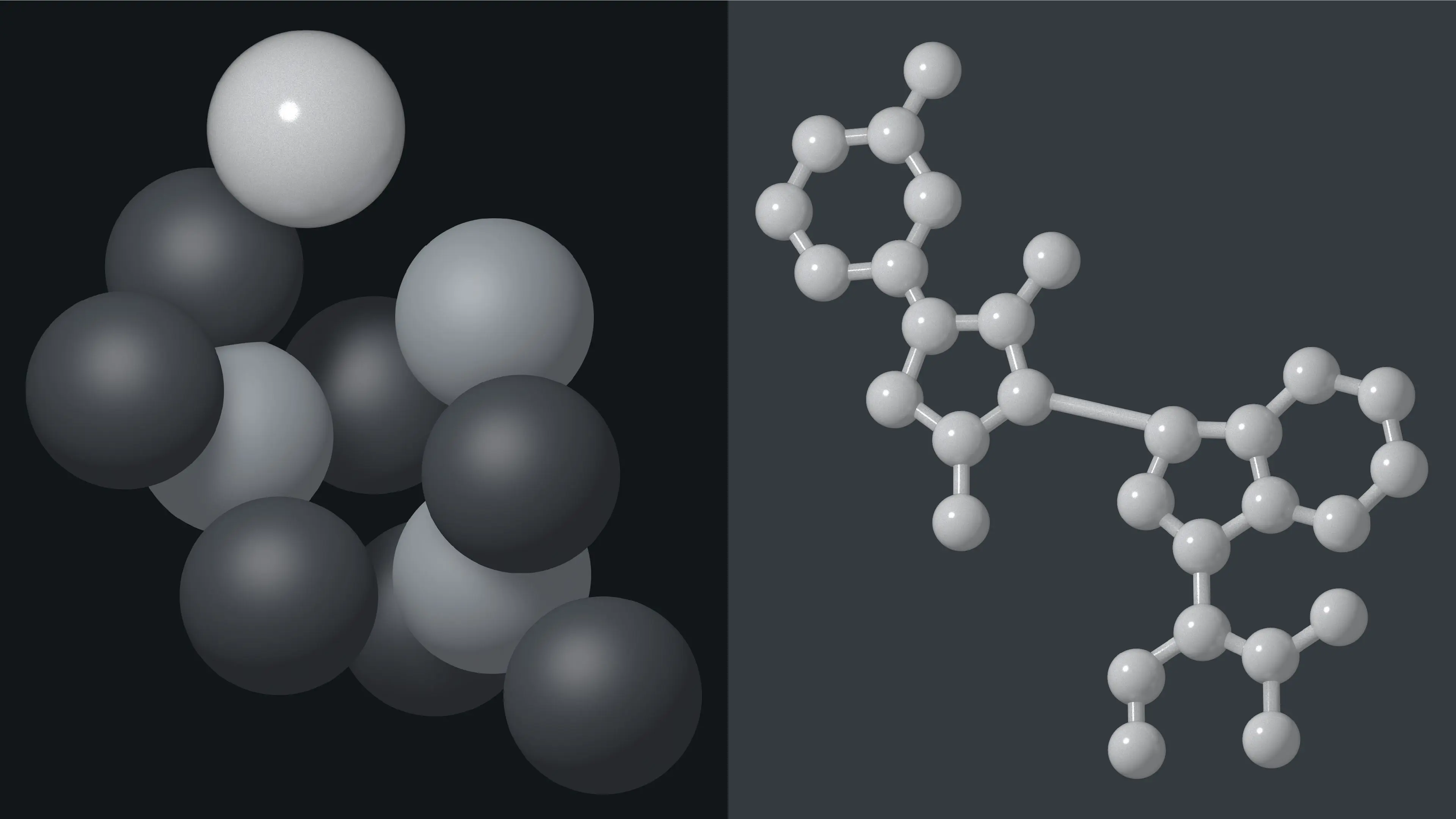

Unlike the words that many large language models concern themselves with, molecules exist in three dimensions, and their physical structure strongly shapes their behavior. One of the great challenges in applying AI to chemistry hinges on how to represent molecular structures in a way that computers can effectively analyze and manipulate.

Various representation styles have emerged over the years. Molecular structures can be described in natural language; as SMILES and SELFIES text strings; as molecular graphs with atom “nodes” and bond “edges”; as numerical values describing the relative strength of their physical properties; and as spectra, which are measured with spectrometers and show how a target molecule interacts with light.

Each format has its strengths and its limitations when it comes to applying AI to classification and prediction tasks. The SMILES dataset, with some 1.1 billion molecules represented as text strings, is the world’s largest. But because SMILES strings reduce 3D molecules to lines of text, valuable structural information can get lost, which can cause AI models to generate invalid molecules. SELFIES, an alternative but related format, offers a more robust and flexible grammar for representing valid molecules; however, like other text representations, it lacks 3D information.
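To make the “invalid molecule” problem concrete, here is a minimal Python sketch that checks only two SMILES syntax rules: every branch opened with `(` must be closed, and every ring-closure digit must appear in a pair. The helper is hypothetical and is not part of IBM's models; a string that passes can still be chemically invalid (for example, a carbon with five bonds). SELFIES sidesteps this class of error because every SELFIES string decodes to a valid molecule by design.

```python
def basic_smiles_checks(smiles: str) -> bool:
    """Check two syntactic rules only: balanced branches and paired ring-closure digits.

    This is NOT a full SMILES validator: it ignores valence, aromaticity,
    bracket-atom contents, and two-digit %nn ring labels.
    """
    depth = 0
    open_rings: set[str] = set()
    in_bracket = False
    for ch in smiles:
        if in_bracket:
            if ch == "]":
                in_bracket = False
            continue  # skip charges, H counts, and isotopes inside [...]
        if ch == "[":
            in_bracket = True
        elif ch == "(":
            depth += 1
        elif ch == ")":
            depth -= 1
            if depth < 0:
                return False  # closing a branch that was never opened
        elif ch.isdigit():
            # A ring-closure digit opens a ring bond the first time, closes it the second.
            if ch in open_rings:
                open_rings.remove(ch)
            else:
                open_rings.add(ch)
    return depth == 0 and not open_rings and not in_bracket


for s in ["CC(=O)O", "C1CCCCC1", "CC(=O", "C1CCCCC"]:
    print(s, basic_smiles_checks(s))
# CC(=O)O True
# C1CCCCC1 True
# CC(=O False
# C1CCCCC False
```

A model that emits SMILES one character at a time can easily stop before closing a branch or ring, which is exactly what the last two examples show.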

Molecular graphs, by contrast, capture the spatial arrangement of atoms and their bonds, but this detail comes at a heavy computational cost. Data gathered in experiments and simulations can also be highly informative, but they, too, have drawbacks: Experimental data used for training AI models in chemistry may be incomplete or contain mistakes. For example, measurements of how molecules interact with the electromagnetic spectrum may only include analyses for visible light and leave out infrared or UV, giving an AI model a skewed view of the world.
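At its simplest, a molecular graph is a list of atoms (nodes) plus a list of bonds (edges). The minimal Python sketch below encodes acetic acid this way, storing each atom's atomic number and formal charge as node features. The class names are hypothetical, hydrogens are left implicit, and no 3D coordinates are stored, so this version captures connectivity only; a 3D-aware variant would add a position per atom, which is part of why richer graph representations cost more to process.

```python
from dataclasses import dataclass, field


@dataclass
class Atom:
    atomic_number: int
    formal_charge: int = 0


@dataclass
class MolGraph:
    atoms: list[Atom] = field(default_factory=list)
    # Each bond is (atom_i, atom_j, bond_order); hydrogens are left implicit.
    bonds: list[tuple[int, int, int]] = field(default_factory=list)

    def neighbors(self, i: int) -> list[int]:
        """Indices of atoms bonded to atom i."""
        out = []
        for a, b, _order in self.bonds:
            if a == i:
                out.append(b)
            elif b == i:
                out.append(a)
        return out


# Acetic acid, SMILES "CC(=O)O": heavy atoms C0, C1, O2 (carbonyl), O3 (hydroxyl).
acetic_acid = MolGraph(
    atoms=[Atom(6), Atom(6), Atom(8), Atom(8)],
    bonds=[(0, 1, 1), (1, 2, 2), (1, 3, 1)],
)
print([len(acetic_acid.neighbors(i)) for i in range(len(acetic_acid.atoms))])
# [1, 3, 1, 1]
```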

IBM researchers debated the pros and cons of each representation as they mapped out their plan for building a foundation model for materials. They ultimately pre-trained each model, with its unique modality, independently.

SMILES-TED and SELFIES-TED (short for “transformer encoder-decoder”) were pre-trained on 91 million validated SMILES samples and 1 billion validated SELFIES samples, respectively, drawn from the PubChem and Zinc-22 databases. MHG-GED (short for “molecular hypergraph grammar with graph-based encoder-decoder”) was pre-trained on 1.4 million SMILES-derived molecular graphs that included each atom’s atomic number and charge.
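Before a transformer encoder-decoder can read a SMILES string, the string has to be split into tokens. One widely used approach, sketched here in Python, is a regular expression that keeps multi-character units such as `Cl`, `Br`, and bracket atoms like `[N+]` intact. Whether SMILES-TED uses this exact pattern is an assumption; the sketch only shows what a text-based model's input looks like.

```python
import re

# Regex-based SMILES tokenizer of the kind commonly used with molecular transformers.
# Bracket atoms come first so "[N+]" stays one token; "Br?" / "Cl?" keep halogens whole.
SMILES_TOKEN_PATTERN = re.compile(
    r"(\[[^\]]+]|Br?|Cl?|N|O|S|P|F|I|b|c|n|o|s|p|\(|\)|\.|=|#|-|\+|\\|\/|:|~|@|\?|>|\*|\$|%[0-9]{2}|[0-9])"
)


def tokenize_smiles(smiles: str) -> list[str]:
    tokens = SMILES_TOKEN_PATTERN.findall(smiles)
    # findall silently skips unmatched characters, so verify nothing was lost.
    if "".join(tokens) != smiles:
        raise ValueError(f"untokenizable characters in {smiles!r}")
    return tokens


print(tokenize_smiles("CC(=O)Cl"))
# ['C', 'C', '(', '=', 'O', ')', 'Cl']
```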

Calling multiple experts

An AI architecture known as mixture of experts, or MoE, has become a popular way to serve large models more efficiently by using a router to selectively activate a subset of the model’s weights for different tasks. An MoE takes the user’s incoming query and hands it off to a routing algorithm that decides which ‘experts’ are best suited for the job.
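The routing idea fits in a few lines. In this minimal Python sketch, which illustrates generic top-k gating rather than IBM's implementation, a linear router scores every expert, only the k highest-scoring experts actually run, and their outputs are mixed with softmax weights renormalized over the chosen experts. The toy experts and router weights are hypothetical.

```python
import math
from typing import Callable, Sequence

Expert = Callable[[Sequence[float]], float]


def softmax(xs: Sequence[float]) -> list[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: Sequence[float],
    experts: Sequence[Expert],
    router_weights: Sequence[Sequence[float]],
    k: int = 2,
) -> tuple[float, dict[int, float]]:
    """Score every expert with a linear router, run only the top-k, and mix their outputs."""
    logits = [sum(w_j * x_j for w_j, x_j in zip(w, x)) for w in router_weights]
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    gates = softmax([logits[i] for i in top])  # renormalize over the chosen experts
    output = sum(g * experts[i](x) for g, i in zip(gates, top))
    return output, dict(zip(top, gates))


# Hypothetical toy experts and router weights.
experts = [lambda x: sum(x), lambda x: max(x), lambda x: min(x), lambda x: 0.0]
router_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, 0.0]]
y, gates = moe_forward([2.0, 1.0], experts, router_weights, k=2)
print(sorted(gates))  # [0, 1]: only experts 0 and 1 were activated
```

Because the unselected experts never run, compute per input scales with k rather than with the total number of experts.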

IBM researchers used the MoE concept to fuse the complementary strengths of their SMILES, SELFIES, and molecular graph-based models. In a study presented at the 2024 NeurIPS conference in Vancouver, they showed that by combining the embeddings of the three data modalities in a “multi-view” MoE architecture, they could outperform other leading molecular foundation models built on a single modality.

They tested their MoE on MoleculeNet, a benchmark created at Stanford that mirrors some of the tasks commonly used in drug and materials discovery. The benchmark includes a wide variety of classification tasks, such as predicting whether a given molecule is toxic, and regression tasks, such as predicting how soluble a given molecule is in water. The researchers found that their multi-view MoE outperformed other leading models on both types of task.
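The two task types are scored differently. MoleculeNet's toxicity classification sets (such as Tox21) are commonly evaluated with ROC-AUC, and its water-solubility regression set (ESOL) with root-mean-square error. The plain-Python sketch below computes both on toy numbers; it is illustrative only and does not reproduce the study's evaluation code or results.

```python
import math


def roc_auc(labels: list[int], scores: list[float]) -> float:
    """Probability that a random positive outscores a random negative (ties count 1/2)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def rmse(y_true: list[float], y_pred: list[float]) -> float:
    """Root-mean-square error; lower is better."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.75
print(round(rmse([1.0, 2.0, 3.0], [1.0, 2.0, 5.0]), 4))  # 1.1547
```

The pairwise loop is quadratic in the number of examples, which is fine for a sketch; a production evaluation would sort the scores instead.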

The MoE approach also provides insights into which data representations pair best with which types of tasks. Researchers discovered, for example, that their MoE favored the SMILES- and SELFIES-based models on some types of tasks, while calling on all three modalities evenly on others, showing that the graph-based model added predictive value on certain problems.
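One simple way to surface this kind of pattern is to log which experts the router selects for each input and tally each expert's share of the routing slots per task. The Python sketch below does that; the routing logs are invented for illustration and do not reproduce the study's results.

```python
from collections import Counter


def activation_share(routing_log: list[list[str]]) -> dict[str, float]:
    """Fraction of all routing slots that went to each expert.

    routing_log holds, for each input molecule, the names of the experts
    the router selected.
    """
    counts = Counter(name for chosen in routing_log for name in chosen)
    total = sum(counts.values())
    return {name: n / total for name, n in counts.items()}


# Made-up routing logs for two hypothetical tasks.
task_a = [["smiles", "selfies"], ["smiles", "selfies"], ["selfies", "smiles"]]
task_b = [["smiles", "graph"], ["selfies", "graph"], ["smiles", "selfies"]]
print(activation_share(task_a))  # {'smiles': 0.5, 'selfies': 0.5}
print(activation_share(task_b))  # each expert gets one third of the slots
```

In the first toy task the graph expert is never chosen; in the second all three modalities share the load evenly, the two kinds of pattern described above.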

“This expert activation pattern suggests that an MoE can effectively adapt to specific tasks for improved performance,” says Emilio Vital Brazil, a senior scientist at IBM Research co-leading the project.

What’s next

IBM researchers will demo the foundation models and their capabilities at the upcoming Association for the Advancement of Artificial Intelligence (AAAI) conference in February. In the coming year, they plan to release new fusion techniques and models built on additional data modalities, including the positioning of atoms in 3D space.

Through the AI Alliance, IBM is also collaborating with other researchers in academia and industry to accelerate the discovery of safer, more sustainable materials. This spring, IBM and the Japanese materials company JSR launched a working group for materials (WG4M) that has drawn about 20 corporate and academic partners so far.

The group is focused on developing new foundation models, datasets, and benchmarks that can be applied to problems ranging from reusable plastics to materials needed to support renewable energy. "There's no time to waste," says Dave Braines, the CTO of emerging technology at IBM Research UK. "New, more sustainable materials are needed in virtually every industry, from semi manufacturing to clean energy. AI now gives us the power to multiply our creativity."
