IBM welcomes CERN as a new hub in the IBM Quantum Network

Particle physics is at the core of our understanding of the fundamental laws governing Nature. To probe the sub-atomic scale, scientists have engineered powerful particle accelerators capable of revealing those laws.

The latest generations of particle accelerators at the European Organization for Nuclear Research (CERN) near Geneva produce data at a pace that far exceeds the storage and processing capabilities of even the most powerful supercomputers on Earth.

Quantum computing can help. Now that CERN has become the newest IBM Quantum Hub (see Note 1), the CERN-IBM relationship will be closer than ever before.

Until now, scientists have used classical machine learning techniques to analyze the raw data captured by the particle detectors, automatically selecting the best candidate events. We think this screening process can be greatly improved by boosting machine learning with quantum computing. In particular, quantum computers can exploit the exponentially large Hilbert space of their qubits (see Note 2). This should let them capture quantum correlations in particle collision datasets more efficiently and accurately than conventional classical machine learning algorithms. That ability should, in turn, lead to a better interpretation of experiments.
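To make "exponentially large" concrete: an n-qubit state is described by 2^n complex amplitudes, and the inner product described in Note 2 maps two such vectors to a single complex number. The minimal pure-Python sketch below is our own illustration, not code from the paper. It builds product states of RY-rotated qubits with an explicit Kronecker product. The helper names `kron`, `product_state`, and `inner_product` are hypothetical.

```python
import math

# Illustrative sketch (assumption): plain lists of complex numbers stand in
# for a statevector simulator; none of this is taken from the paper.

def kron(a, b):
    """Kronecker (tensor) product of two state vectors."""
    return [x * y for x in a for y in b]

def product_state(thetas):
    """Tensor product of single-qubit states RY(t)|0> = cos(t/2)|0> + sin(t/2)|1>.

    Each added qubit doubles the length of the vector: n qubits -> 2**n amplitudes.
    """
    state = [complex(1.0)]
    for t in thetas:
        state = kron(state, [complex(math.cos(t / 2)), complex(math.sin(t / 2))])
    return state

def inner_product(u, v):
    """<u|v>: conjugate the first vector, multiply elementwise, sum to one complex scalar."""
    return sum(a.conjugate() * b for a, b in zip(u, v))

state = product_state([0.3] * 15)
print(len(state))  # 32768
print(abs(inner_product(state, state)))  # a normalized state has norm close to 1
```

For the 15-qubit register used in the experiment, the vector has 2^15 = 32,768 amplitudes. That is about 512 KiB at 16 bytes per double-precision complex amplitude. For all 27 qubits of the device, it would be 2^27 = 134,217,728 amplitudes, or 2 GiB. A product state like the ones built here can be stored with a few numbers per qubit; it is entangled states that generally require the full vector.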

In our recent preprint on arXiv (Wu et al., 2021), we detail the use of a so-called quantum support vector machine (QSVM) in this context. The QSVM is a quantum algorithm that classifies data into categories of interest; for instance, it can distinguish between collisions that do and do not produce the Higgs boson. The Higgs boson was theorized in 1964 and discovered at CERN in 2012. According to the Standard Model of particle physics, it is the elementary particle associated with the mechanism that gives mass to the other fundamental particles.
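The formula behind a QSVM, in the usual quantum-kernel formulation, is sketched below. The notation is ours and is assumed rather than quoted from the paper. A feature-map circuit encodes an event x as a state |φ(x)⟩, and the quantum computer estimates the kernel k as a state overlap. A standard classical SVM then uses those kernel values in its decision function, with labels y_i = +1 (signal) or -1 (background):

```latex
\begin{aligned}
k(\mathbf{x}, \mathbf{x}') &= \left| \langle \phi(\mathbf{x}) \mid \phi(\mathbf{x}') \rangle \right|^{2}, \\
f(\mathbf{x}) &= \operatorname{sign}\!\Big( \sum_{i} \alpha_i \, y_i \, k(\mathbf{x}_i, \mathbf{x}) + b \Big).
\end{aligned}
```

Only the kernel entries need a quantum computer. The coefficients α_i and the bias b are fitted classically, exactly as for a classical kernel SVM.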

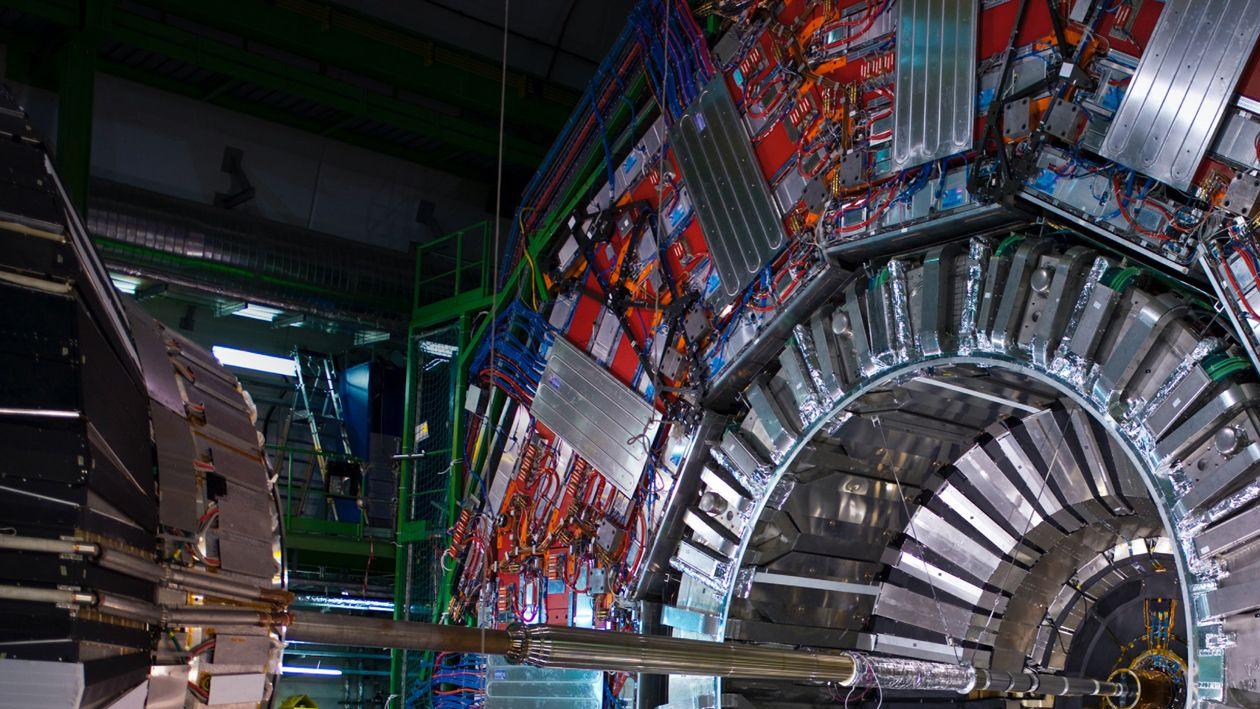

In the paper, we use quantum algorithms to classify data associated with Higgs boson production at the ATLAS detector of CERN's Large Hadron Collider (LHC).

The aim of our experiment was to identify specific processes in which Higgs boson production in association with a top-quark pair can be observed directly (the top quark is the heaviest known fundamental particle). The low production rate of this process makes the observation challenging. Machine learning techniques allow a significantly better separation of signal from background.

We show that, under the same data preparation conditions, quantum support vector machines (with a quantum kernel estimator) perform at least as well as the best optimized classical classifiers used at CERN openlab.
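The post does not say which figure of merit was used to compare classifiers. A common choice for signal-versus-background classification, assumed here, is the area under the ROC curve (AUC). It equals the probability that a randomly chosen signal event scores higher than a randomly chosen background event. Below is a minimal pure-Python sketch; the function name `roc_auc` and the 1/0 label convention are hypothetical.

```python
def roc_auc(scores, labels):
    """Area under the ROC curve via its rank interpretation.

    Returns the fraction of (signal, background) pairs in which the signal
    event gets the higher score, counting ties as one half.
    labels: 1 for signal, 0 for background (an assumed convention).
    """
    pos = [s for s, lab in zip(scores, labels) if lab == 1]
    neg = [s for s, lab in zip(scores, labels) if lab == 0]
    if not pos or not neg:
        raise ValueError("need at least one signal and one background event")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))

print(roc_auc([0.8, 0.4, 0.6, 0.2], [1, 1, 0, 0]))  # 0.75
```

Comparing a QSVM with a classical classifier this way means scoring the same held-out events with both models. The pairwise loop is O(P·N), so a sort-based implementation is preferable for large samples.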

We also obtained similar performance on IBM quantum computers, using a dataset rescaled to fit in a 15-qubit register of a 27-qubit device. The quality of these results points toward a possible demonstration of quantum advantage for data classification with quantum support vector machines in the near future.

Quantum vs. classical classifiers

To run the experiment, we developed a way to compress the experimental data collected at the LHC, such as the energies and momenta of the colliding and emerging sub-atomic particles. The aim of the technique was to encode the necessary data efficiently into a 15-qubit register of one of our premium quantum computers, which has a 27-qubit Falcon processor.
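The post does not detail the compression step itself. The sketch below therefore covers only a final rescaling step of the kind a rotation-based encoding typically needs: each (already reduced) feature is mapped to an angle in [0, π]. The target range, the helper names `fit_minmax` and `to_angles`, and the clipping rule are all assumptions for illustration.

```python
import math

# Illustrative sketch (assumption): min-max rescaling of already-compressed
# features to rotation angles; not the paper's actual preprocessing.

def fit_minmax(rows):
    """Per-feature (min, max) bounds, computed on the training set only."""
    return [(min(col), max(col)) for col in zip(*rows)]

def to_angles(rows, bounds, low=0.0, high=math.pi):
    """Map each feature linearly from its training range onto [low, high]."""
    out = []
    for row in rows:
        scaled = []
        for value, (lo, hi) in zip(row, bounds):
            span = hi - lo
            t = 0.0 if span == 0 else (value - lo) / span
            t = min(max(t, 0.0), 1.0)  # clip test values outside the training range
            scaled.append(low + t * (high - low))
        out.append(scaled)
    return out

train = [[1.0, 10.0], [3.0, 30.0]]
bounds = fit_minmax(train)
print(to_angles([[2.0, 40.0]], bounds))  # -> [[pi/2, pi]]; second value clipped
```

Fitting the bounds on the training set alone, then clipping test events, keeps information from the test set out of the preprocessing.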

To set an accurate benchmark for our technique, we used the same processed dataset for the state-of-the-art classical machine learning calculations. Next, we prepared the quantum circuit that reads in the data and generates the quantum kernel, which measures the “distance” (similarity) between pairs of events. On that basis, we set out to classify the events in which a Higgs boson was produced and to distinguish them from all other possible events.
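The quantum kernel can be written as the squared overlap between the states that encode two events, k(x, x′) = |⟨φ(x)|φ(x′)⟩|². It equals 1 for identical encodings and shrinks as events become less similar. The sketch below computes it exactly, by classical statevector simulation, for a single layer of a ZZ-style feature map: Hadamards on every qubit, then single-qubit and pairwise phase rotations. This circuit is an assumption, loosely modeled on common quantum-kernel feature maps, and not necessarily the one used in the paper. The names `feature_phases`, `quantum_kernel`, and `kernel_matrix` are hypothetical.

```python
import cmath
import math
from itertools import combinations

# Illustrative sketch (assumption): one layer of a ZZ-style feature map,
# simulated exactly; the paper's encoding circuit may differ.

def feature_phases(x):
    """Phase picked up by each basis state |z> after the Hadamard layer.

    Single-qubit terms add 2*x_k when bit k is 1; pairwise terms add
    2*(pi - x_k)*(pi - x_l) when bits k and l differ.
    """
    n = len(x)
    phases = []
    for z in range(2 ** n):
        bits = [(z >> k) & 1 for k in range(n)]
        phi = sum(2 * x[k] * bits[k] for k in range(n))
        for k, l in combinations(range(n), 2):
            phi += 2 * (math.pi - x[k]) * (math.pi - x[l]) * (bits[k] ^ bits[l])
        phases.append(phi)
    return phases

def quantum_kernel(x, y):
    """Fidelity kernel |<phi(x)|phi(y)>|^2 from the exact statevectors.

    Every amplitude has magnitude 1/sqrt(2**n), so the overlap is the
    average of exp(i*(phase_y - phase_x)) over all basis states.
    """
    px, py = feature_phases(x), feature_phases(y)
    overlap = sum(cmath.exp(1j * (b - a)) for a, b in zip(px, py)) / len(px)
    return abs(overlap) ** 2

def kernel_matrix(xs, ys):
    """Gram matrix of kernel values between two lists of events."""
    return [[quantum_kernel(a, b) for b in ys] for a in xs]

events = [[0.1, 0.5, 1.2], [0.3, 2.0, 0.7], [1.5, 0.2, 2.9]]  # toy rescaled features
K = kernel_matrix(events, events)
```

Because this is an exact simulation, its cost doubles with every added qubit; at 15 qubits it already loops over 32,768 basis states per event.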

We then trained the quantum algorithm on a training dataset, enabling it to classify collision events accurately. When the algorithm is simulated classically, its performance is comparable to, if not better than, that of the best-known classical classifiers used routinely at CERN. This observation shows the potential of the QSVM for quantum advantage in challenging and highly interesting physics problems.
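Training a QSVM means solving the usual SVM problem on a precomputed kernel matrix. The sketch below swaps in a simple solver, dual coordinate ascent for a soft-margin SVM without a bias term, in place of whatever optimized solver the authors used. To stay self-contained, it also uses the closed-form fidelity of a product-state encoding (one RY rotation per feature, no entanglement) instead of a hardware-estimated kernel. The training points, labels, and names such as `train_svm_dual` are hypothetical.

```python
import math

# Illustrative sketch (assumptions): a closed-form product-state kernel and a
# simple bias-free SVM solver stand in for the paper's kernel and solver.

def product_kernel(x, y):
    """Fidelity of the product states prod_k RY(x_k)|0> and prod_k RY(y_k)|0>.

    For one qubit, <RY(a)0|RY(b)0> = cos((a - b) / 2), so the squared overlap
    of the full product state is prod_k cos^2((x_k - y_k) / 2).
    """
    value = 1.0
    for a, b in zip(x, y):
        value *= math.cos((a - b) / 2) ** 2
    return value

def train_svm_dual(K, y, C=1.0, epochs=200):
    """Dual coordinate ascent for a soft-margin SVM without a bias term.

    Maximizes sum(alpha) - 1/2 * sum_ij alpha_i alpha_j y_i y_j K_ij subject to
    0 <= alpha_i <= C, updating one coefficient at a time in closed form.
    K is a precomputed kernel matrix; y holds labels in {-1, +1}.
    """
    n = len(y)
    alpha = [0.0] * n
    for _ in range(epochs):
        for i in range(n):
            if K[i][i] <= 0:
                continue
            # Gradient of the negated dual objective with respect to alpha_i.
            grad = y[i] * sum(alpha[j] * y[j] * K[i][j] for j in range(n)) - 1.0
            # Exact minimization along coordinate i, then projection onto [0, C].
            alpha[i] = min(max(alpha[i] - grad / K[i][i], 0.0), C)
    return alpha

def decision(alpha, y, train_x, x, kernel):
    """Signed score: positive means signal (+1), negative means background (-1)."""
    return sum(a * yi * kernel(xi, x) for a, yi, xi in zip(alpha, y, train_x) if a > 0)

# Toy, hypothetical training events (already rescaled to angles) and labels.
train_x = [[0.2, 0.3], [0.4, 0.1], [2.8, 2.9], [2.6, 3.0]]
train_y = [1, 1, -1, -1]  # +1 = signal, -1 = background
K = [[product_kernel(a, b) for b in train_x] for a in train_x]
alpha = train_svm_dual(K, train_y, C=1.0)
print(decision(alpha, train_y, train_x, [0.3, 0.2], product_kernel) > 0)  # True
```

Dropping the bias term removes the equality constraint from the dual. That is what lets each coefficient be updated in closed form and simply clipped to [0, C].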

Finally, we tested our quantum algorithm on hardware with a 15-qubit experiment on IBM Quantum devices. The analysis revealed that, despite the “noise” affecting quantum calculations, the classification quality remained very high and was comparable to the best classical simulation results. This again confirms the potential of the quantum algorithm for this class of problems.
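Even without gate or readout errors, a kernel entry measured on hardware is only an estimate. A common way to obtain it, assumed here, is to run the encoding circuit for one event followed by the inverse encoding for the other. The fraction of shots that return the all-zeros bitstring then estimates the kernel value, since that outcome's probability equals the kernel. The sketch below models only this finite-shot sampling noise; device noise is not modeled, and the helper names are hypothetical.

```python
import math
import random

# Illustrative sketch (assumption): models only finite-shot sampling noise of a
# compute-uncompute kernel estimate; gate and readout errors are not modeled.

def estimate_kernel_entry(true_value, shots, rng):
    """Fraction of simulated shots that return the all-zeros bitstring.

    Each shot is a Bernoulli trial whose success probability is the true
    kernel value |<phi(x')|phi(x)>|^2.
    """
    hits = sum(1 for _ in range(shots) if rng.random() < true_value)
    return hits / shots

def standard_error(p, shots):
    """Binomial standard error of a probability p estimated from `shots` samples."""
    return math.sqrt(p * (1 - p) / shots)

rng = random.Random(7)  # fixed seed so the run is reproducible
estimate = estimate_kernel_entry(0.6, 8192, rng)
print(estimate, standard_error(0.6, 8192))
```

Take 8,192 shots (an arbitrary choice here) and a true value of 0.6: the standard error is about 0.0054, so kernel entries carry a statistical spread of that order even on an ideal device.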

Today's quantum computers are still noisy. The quantum states stored in their qubits are fragile, with lifetimes measured in microseconds, which is not enough to perform long and complex calculations. Still, our results show that quantum machine learning algorithms for data classification can be as accurate as classical ones even on noisy quantum computers. This paves the way to a demonstration of quantum advantage in the near future.

We also noticed that, under certain conditions, the noise of quantum computers can even work in our favor by improving the convergence of the algorithm. Still, a clear demonstration of the quantum support vector machine's advantage will become possible only with larger, less noisy quantum computers (higher quantum volume), or once error-corrected (fault-tolerant) quantum computers become a reality.

Our results suggest that quantum computers could significantly improve the interpretation of physical data from particle accelerators. Beyond potentially faster and more accurate classification of the monitored events, we hope that quantum computers could one day even help in the search for exciting “new physics” beyond the well-known Standard Model of particle physics.

CERN: The new IBM Quantum Hub

In June, CERN became IBM’s newest quantum hub. The mission of the CERN hub is to explore promising use cases for high-energy physics together with academia and research institutions. It follows a hub-and-spoke model of engagement, in which hub members can be academic or research institutes. The move gives CERN dedicated access to IBM Quantum's fleet of more than 20 quantum computers.

Other IBM Quantum Hubs include Keio University in Japan, Sungkyunkwan University (SKKU) in South Korea, the University of Melbourne in Australia, the Université de Sherbrooke in Canada, Bundeswehr University in Germany, National Taiwan University, and the University of Minho in Portugal. In the future, the hubs are likely to share tools, experiences, and best practices to boost the rapidly emerging field of quantum information science.

Replay: CERN & IBM Research exploring quantum computing for high-energy physics

Watch a replay of a discussion between CERN openlab's Alberto Di Meglio and IBM's Ivano Tavernelli about recently published high-energy physics research: “Exploring quantum computing for high-energy physics.”

Notes

- Note 1: CERN openlab’s current Quantum Technology Initiative comprises dozens of projects across computing, sensing, communication, and theory. Read the case study.

- Note 2: A Hilbert space can be thought of as the state space in which all quantum state vectors “live.” The main difference between a Hilbert space and an arbitrary vector space is that a Hilbert space is equipped with an inner product, an operation between two vectors that returns a scalar. Read more about linear algebra in the Qiskit Textbook.

References

- Wu, S., Sun, S., Guan, W., et al. Application of Quantum Machine Learning using the Quantum Kernel Algorithm on High Energy Physics Analysis at the LHC. arXiv (2021).
